When fitting a model to some training dataset, we want to avoid overfitting. A common method to do so is to use regularization. In this article, we discuss the impact of L2-regularization on the estimated parameters of a linear model.

What is L2-regularization

L2-regularization adds a regularization term to the loss function. The goal is to prevent overfiting by penalizing large parameters in favor of smaller parameters. Let be some dataset and the vector of parameters:

Where is an hyperparameter that controls how important the regularization

The effect of the hyperparameter

Increasing the hyperparameter moves the optimal of closer to , and away from the optimal for the unregularized loss .

This can be visualized using one feature and a dataset made of one sample . Take (for instance) the following loss:

The regularization term is:

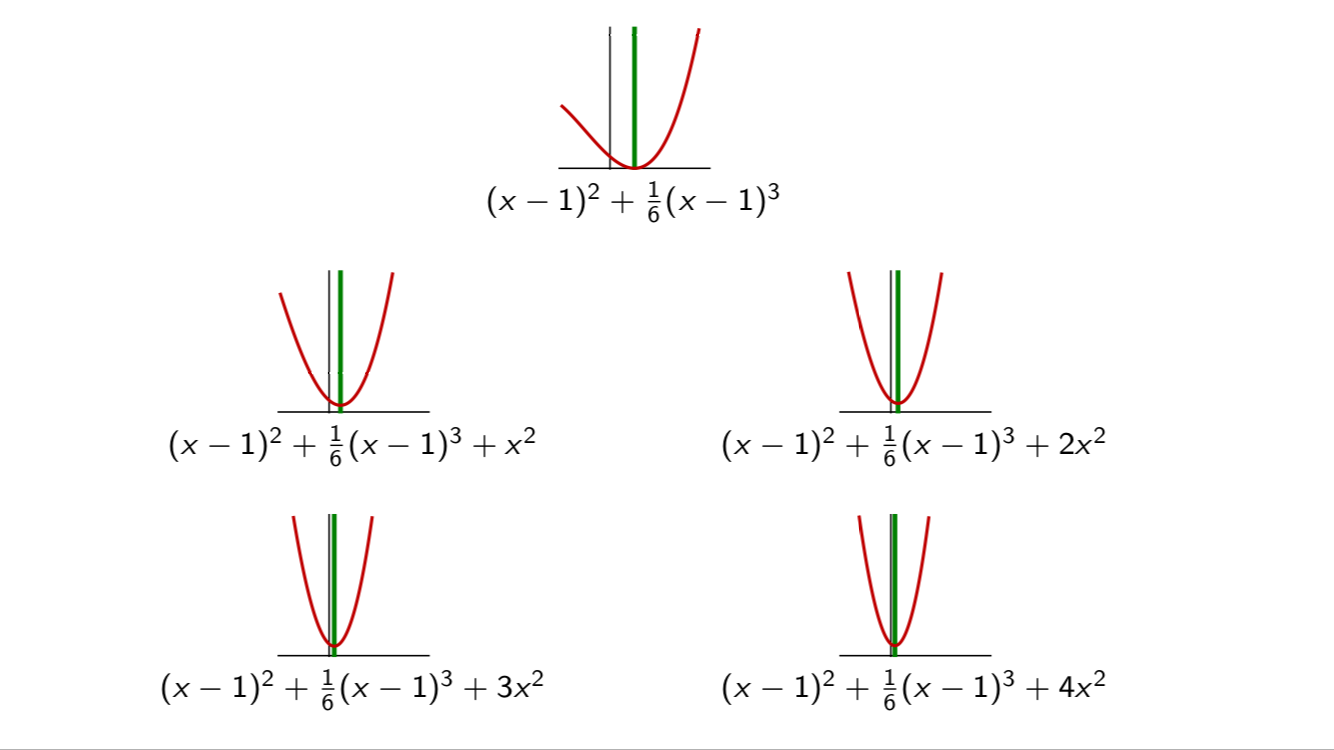

On the graph below, we plotted this loss function (first graph) and several variant of the corresponding for different values of . As the value of increases, the loss curve is translated towards .

This can be vizualized in 2D, where we see that the optimum of the regularized loss approaches more and more when increases. However, there is another way to conceptualize the regularization, which we will present next.

Regularization seen as constrained optimization

To understand how L2-regularization impacts the parameters, we will use an example in .

Let’s note the vector of parameters.

Our estimate is:

Which is equivalent to a constrained optimization problem:

| minimize: | |

| subject to: |

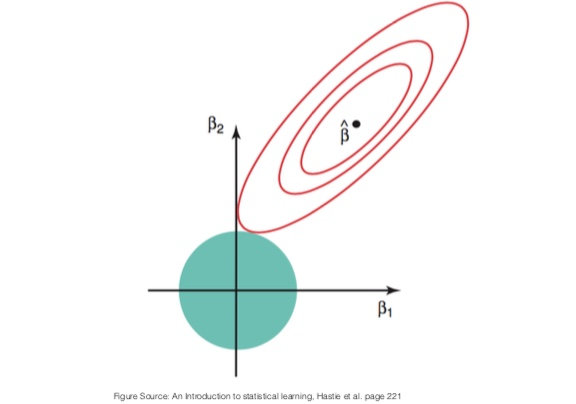

This formulation is easier to interpret: the selected vector of parameters is the vector that minimzes the loss, among all vector inside the ball of radius .

This is illustrated on the picture below. The red contour lines are the contour lines of the loss function . The unregularized optimal is indicated by a black dot at the location of the minimum of . The ball of radius is drawn in blue. The solution to the constrained optimization is the intersection of the contour lines and the ball.

Effect on the individual parameters

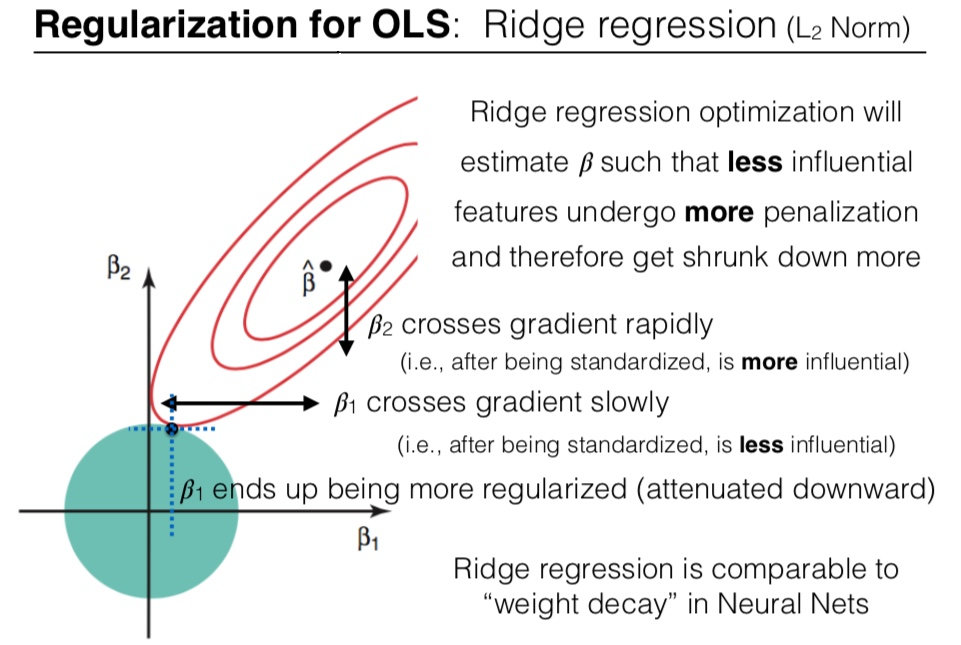

What is the effect of the regularization on the individual parameters and ?

Regularized optimization will estimate such that less influential features undergo more penalization and therefore get shrunk down more.

On the plot above, crosses the gradient more rapidly that (we can see this as the contour lines are less separated along the axis than along the axis). When both and are standardized, this means that is more influential than .

As a result, is more penalized by the L2-constraint than .

This phenomenon explains why we should normalize the features before using regularization. More details on this in what follows.

Effect when approaching 0

Since the gradient of vanishes around , the optimal will never move there if it was not already there. In other words: the parameters are pushed towards , but they are never set to .

Another regularization method, the L1 regularization has a different behavior: since the gradient around do not vanish, the parameters are pushed towards and may attain it and remain there. is.

Important remark about normalization

The regularizer term treats each component the same way:

Therefore it is important to unify the scale of each feature vector . Indeed, suppose that the optimal solution is for some design matrix where:

The estimate is:

If we rescale one column of the design matrix, say , then we don’t bring new information and the estimate should not change. The parameter is inversely rescaled:

And:

But the parameters and will be penalized with the same strength by the L2 regularization.

Therefore, to avoid mistaking influential parameters with non-influential ones because of scaling factors within the feature vector, we must normalize the features.

By norming the columns of , we put them on the same scale. Consequently, differences in the magnitudes of the components of are directly related to the wiggliness of the regression function , which is loosely speaking what the regularization tries to control.

TL;DR: before using regularization, transform your feature vectors into unit vectors.