The residual $\mathbf{e}$ is the error vector between the true output vector $\mathbf{y}$ and its estimate $\hat{\mathbf{y}}$:

$$\mathbf{e} = \mathbf{y} - \hat{\mathbf{y}}$$

Residuals for linear regressions

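When $\hat{\mathbf{y}}$ is produced by a linear model, $\hat{\mathbf{y}} = X\hat{\boldsymbol{\beta}}$, the residual represents the aspects of $\mathbf{y}$ that cannot be explained by the columns of the design matrix $X$:

$$\mathbf{e} = \mathbf{y} - X\hat{\boldsymbol{\beta}}$$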

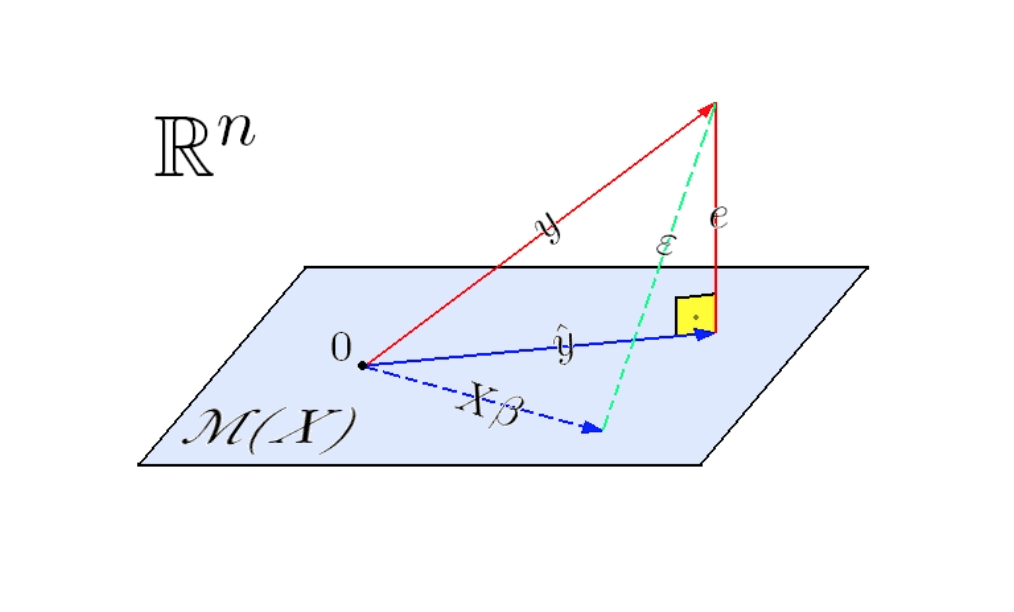

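Since $\hat{\mathbf{y}}$ is a vector in the linear space spanned by the columns of $X$, the residual $\mathbf{e}$ is the vector pointing from $\hat{\mathbf{y}}$ to $\mathbf{y}$. It lies outside this linear space whenever $\mathbf{y}$ itself does.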
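A minimal NumPy sketch of a residual for a linear model. The design matrix `X`, the targets `y` and the parameter vector `beta` are hypothetical toy values, and `beta` is deliberately arbitrary (not fitted), since the definition of the residual does not depend on how the parameters were obtained.

```python
import numpy as np

# Hypothetical toy data: 4 observations, an intercept column plus one feature.
X = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0],
              [1.0, 3.0]])
y = np.array([1.0, 2.0, 2.0, 4.0])

# Arbitrary (not fitted) parameter vector, used only to illustrate the definition.
beta = np.array([0.5, 1.0])

y_hat = X @ beta  # estimate: a linear combination of the columns of X
e = y - y_hat     # residual: the part of y not explained by X @ beta

# By construction, the estimate and the residual add back up to y.
print(np.allclose(y_hat + e, y))
```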

Note: when the MSE loss is used (as in ordinary least squares), the residual is orthogonal to this linear space.
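A quick numerical check of this note on the same kind of hypothetical toy data: the least-squares (MSE-minimizing) solution returned by `np.linalg.lstsq` gives a residual with $X^\top \mathbf{e} \approx \mathbf{0}$, i.e. orthogonal to every column of $X$, whereas an arbitrary parameter vector generally does not.

```python
import numpy as np

# Hypothetical toy data: intercept column plus one feature.
X = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0],
              [1.0, 3.0]])
y = np.array([1.0, 2.0, 2.0, 4.0])

# Least-squares fit: minimizes the squared norm of y - X @ beta.
beta_ls, *_ = np.linalg.lstsq(X, y, rcond=None)
e_ls = y - X @ beta_ls

# Arbitrary, non-optimal parameters for comparison.
beta_other = np.array([0.5, 1.0])
e_other = y - X @ beta_other

# Inner products of the residual with each column of X.
print(X.T @ e_ls)     # numerically close to zero
print(X.T @ e_other)  # clearly non-zero
```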

Let $\mathcal{C}(X)$ be the column space of $X$. The vector $\hat{\mathbf{y}}$ lies in this linear subspace, while $\mathbf{y}$ in general does not. This is illustrated in the picture below.


Residuals for OLS

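The parameter vector $\hat{\boldsymbol{\beta}}$ can be expressed using a closed-form formula in the case of an OLS regression (assuming $X^\top X$ is invertible, i.e. $X$ has full column rank):

$$\hat{\boldsymbol{\beta}} = (X^\top X)^{-1} X^\top \mathbf{y}$$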
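A minimal sketch of the closed-form OLS estimate on hypothetical toy data. Instead of forming $(X^\top X)^{-1}$ explicitly, it solves the normal equations $X^\top X\,\hat{\boldsymbol{\beta}} = X^\top \mathbf{y}$ with `np.linalg.solve`, which is mathematically equivalent when $X^\top X$ is invertible and numerically preferable. The result is compared with `np.linalg.lstsq`.

```python
import numpy as np

# Hypothetical toy data: intercept column plus one feature.
X = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0],
              [1.0, 3.0]])
y = np.array([1.0, 2.0, 2.0, 4.0])

# Closed-form OLS via the normal equations (X^T X) beta = X^T y.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)

# Reference solution from NumPy's least-squares solver.
beta_ref, *_ = np.linalg.lstsq(X, y, rcond=None)

print(beta_hat)
print(np.allclose(beta_hat, beta_ref))
```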

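Plugging this formula into the definition of a residual, we get:

$$\mathbf{e} = \mathbf{y} - X\hat{\boldsymbol{\beta}} = \mathbf{y} - X(X^\top X)^{-1} X^\top \mathbf{y}$$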

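The matrix $H = X(X^\top X)^{-1} X^\top$ is the hat matrix (because it puts a hat on $\mathbf{y}$: $\hat{\mathbf{y}} = H\mathbf{y}$). Rewriting the equality with $\mathbf{y}$ as a factor, we get:

$$\mathbf{e} = (I - H)\,\mathbf{y}$$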
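The hat matrix can be built explicitly for a small hypothetical example to check its identities: $H\mathbf{y}$ reproduces the fitted values $X\hat{\boldsymbol{\beta}}$, $(I - H)\mathbf{y}$ reproduces the residual, and $H$ is symmetric and idempotent ($H^2 = H$), as expected of an orthogonal projection. Forming $H$ explicitly is fine for illustration, but it is an $n \times n$ matrix, so it is usually avoided when the number of observations $n$ is large.

```python
import numpy as np

# Hypothetical toy data: intercept column plus one feature.
X = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0],
              [1.0, 3.0]])
y = np.array([1.0, 2.0, 2.0, 4.0])
n = X.shape[0]

# Hat matrix H = X (X^T X)^{-1} X^T; an explicit inverse is acceptable for a tiny example.
H = X @ np.linalg.inv(X.T @ X) @ X.T
I = np.eye(n)

y_hat = H @ y        # fitted values: H "puts a hat on" y
e = (I - H) @ y      # residual written with y as a factor

# OLS parameters for comparison, via the normal equations.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)

print(np.allclose(y_hat, X @ beta_hat))  # H y equals X beta_hat
print(np.allclose(H, H.T))               # symmetric
print(np.allclose(H @ H, H))             # idempotent
```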

As mentioned above, for OLS regressions the residual is orthogonal to the column space of $X$, that is, $X^\top \mathbf{e} = \mathbf{0}$.
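The orthogonality can be verified directly from the closed-form expression of the residual, $\mathbf{e} = (I - H)\mathbf{y}$ with $H = X(X^\top X)^{-1} X^\top$, assuming as before that $X^\top X$ is invertible:

$$
\begin{aligned}
X^\top \mathbf{e} &= X^\top (I - H)\,\mathbf{y} \\
&= \left(X^\top - X^\top X (X^\top X)^{-1} X^\top\right)\mathbf{y} \\
&= \left(X^\top - X^\top\right)\mathbf{y} \\
&= \mathbf{0}
\end{aligned}
$$

Since $\mathbf{e}$ has zero inner product with every column of $X$, it also has zero inner product with every linear combination of those columns, i.e. with every vector of $\mathcal{C}(X)$.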