A Logistic regression is a generalized linear model which is tailored to classification. In this article, we introduce this regression and explain its origin.

Setup

The dataset of study consists of pairs of input vector and output value :

We suppose that there exists an approximate deterministic relationship between the inputs and the outputs :

The goal of a logistic regression is to learn this relationship using a subset of the dataset.

Generalized Linear models

We will approximate the relationship using the combination of a deterministic function and a linear model. This means that we want to find the best model in the class of linear models such that:

The inverse link function

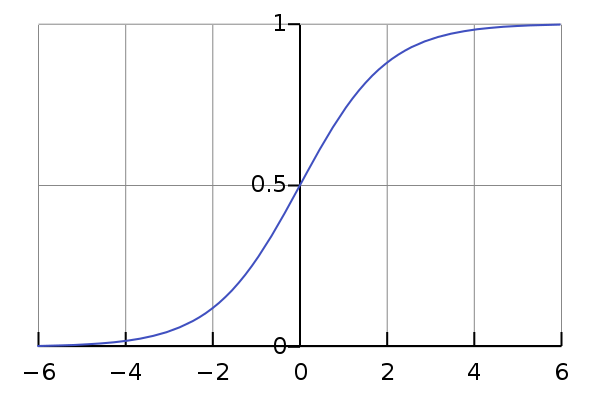

The deterministic function that we will use is the logistic function (we will explain why later):

Here is a graph of this function:

The logistic loss

To measure progress during learning, we use the logistic loss:

Learning the best model then amounts to minimizing the training objective

Origin of the logistic loss

As discussed in this article, usual regression models are ill-adapted to classification.

Logistic regression, however, is tailored to classification problems: instead of directly attempting to predict the label , predict the probability that is in class . That way, we turn a discrete classification problem into a continuous regression problem.

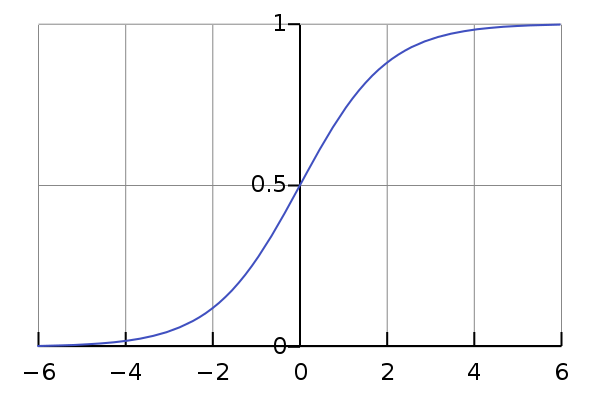

Since the values predicted by a regression model are in range , there only remains to find a way to continuously shrink this range to . This can be done using the logistic function , which is particularly interesting because most of the values it takes agglutinate around and :

Here is a graph of this function:

Using the logistic function, we get the following expression for the probablity that is in class :

And the probability that is in class :

To predict the labels, we compare those probabilities to a threshold ():

Learning the model’s parameters

So, our model predicts the probability that falls within class . How do we learn its parameter vector ?

We will maximize the likelihood to obtain our data. Assuming that each training example was drawn independently from the distribution , the joint likelihood is:

Which is maximal when the log-likelihood is:

Where I use the notation for function composition.

For each , we have:

Since:

We find that:

Since is a constant (the inputs does not depend on ), we find that the value to maximize is:

Replacing by its definition and simplifying, we find the expression to maximize:

Which is precisely . Hence its use as loss function.

But…

The choice of might seem arbitrary. Is it a good idea to predict the probability ? What if we used another function to shrink ?

The theoretical soundness of the logistic regression is explained in the following section.

Logistic regression is a generalized linear model

Logistic regression is a generalized linear model with inverse link function:

The output of the linear regression is an estimate of the natural parameter of a Bernoulli() distribution:

Underlying probabilistic model

The underlying probabilistic model when using a logistic regression is thus:

Let a random vector whose first component is always (this is our bias-term). Let be a vector and let be a Bernoulli random variable with probability of success .

Our dataset is made of i.i.d. samples from the random vector :

Hence, for each , we know that is an observation drawn from a distribution.

In this setup, the logistic regression predicts the value of the natural parameter so as to maximize the likelihood of the observed data.